Abstract

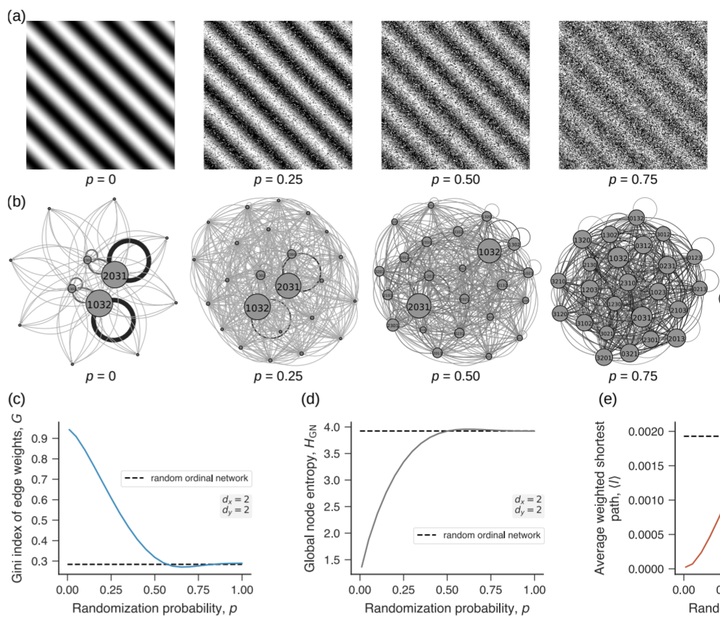

Recent investigations in network science have become increasingly abstract. Examples include the development of algorithms that map time series into networks whose vertices and edges can have interpretations beyond the classical idea of parts and interactions of a complex system. These approaches have proven useful for dealing with the growing complexity and volume of diverse data sets. However, such algorithms are mostly limited to one-dimensional data, and there has been little effort towards extending them to higher-dimensional data such as images. Here we propose a generalization of the ordinal network algorithm for mapping images into networks. We investigate the connectivity constraints that emerge from the symbolization process used to define the network nodes and links, which in turn allow us to derive the exact structure of ordinal networks obtained from random images. We illustrate the use of this new algorithm in a series of applications involving the randomization of periodic ornaments, images generated by two-dimensional fractional Brownian motion and the Ising model, and a data set of natural textures. These examples show that measures obtained from ordinal networks (such as average shortest path and global node entropy) extract important image properties related to roughness and symmetry, are robust against noise, and can achieve higher accuracy than traditional texture descriptors extracted from gray-level co-occurrence matrices in simple image classification tasks.
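
As a rough illustration of the mapping summarized above, the following Python sketch builds an ordinal network from a small gray-level image. The abstract does not specify the symbolization details, so several choices here are assumptions: sliding windows of size `dx` by `dy` (2 by 2 by default), ordinal patterns obtained by sorting the flattened window values, directed links between horizontally and vertically adjacent windows, and link weights normalized to sum to one. The function name `ordinal_network` and its parameters are hypothetical and are not taken from any published implementation.

```python
from collections import Counter

import numpy as np


def ordinal_network(image, dx=2, dy=2):
    """Map a 2D array to a weighted directed network of ordinal patterns.

    Returns a dict {(pattern_a, pattern_b): weight}. Window size, edge
    definition, and normalization are assumptions of this sketch.
    """
    img = np.asarray(image, dtype=float)
    rows, cols = img.shape
    n_i, n_j = rows - dy + 1, cols - dx + 1  # number of window positions

    # Symbolization: each window becomes the permutation that sorts its
    # flattened values (stable sort, so ties keep their original order).
    symbols = []
    for i in range(n_i):
        row_symbols = []
        for j in range(n_j):
            window = img[i:i + dy, j:j + dx].ravel()
            order = np.argsort(window, kind="stable")
            row_symbols.append(tuple(int(k) for k in order))
        symbols.append(row_symbols)

    # Links: count successions between horizontally and vertically
    # adjacent windows (assumed edge definition).
    edges = Counter()
    for i in range(n_i):
        for j in range(n_j):
            if j + 1 < n_j:
                edges[(symbols[i][j], symbols[i][j + 1])] += 1
            if i + 1 < n_i:
                edges[(symbols[i][j], symbols[i + 1][j])] += 1

    total = sum(edges.values())
    return {edge: count / total for edge, count in edges.items()}


# Usage: a random image, for which at most 4! = 24 distinct patterns
# (nodes) can appear with 2-by-2 windows.
rng = np.random.default_rng(0)
network = ordinal_network(rng.random((64, 64)))
nodes = {pattern for edge in network for pattern in edge}
```

Because adjacent windows overlap, not every pair of patterns can be linked, which is one simple way to see where connectivity constraints of the kind mentioned in the abstract could come from.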